Here I will describe how to install the Code Llama model on one of your VMs. The problem is that you usually lose GPU support, because MS is still not able to provide GPU-P for Hyper-V. TY MS at this point.

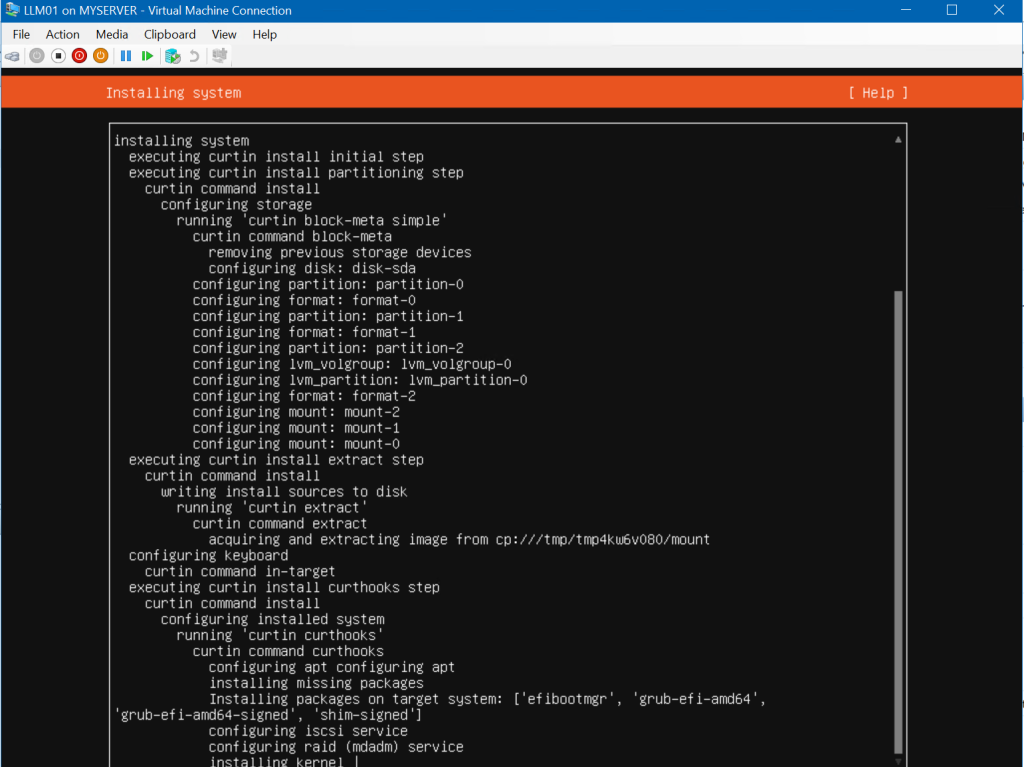

First prepare a VM. I’m choosing Ubuntu Server 22.04.3 LTS and assigning 8 cores and 64 GB of RAM.
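To confirm that the VM really got the resources you assigned, a quick check from inside the guest can help. This is only a minimal sketch: the helper names `vm_cores` and `vm_ram_gib` are made up for this post, and it assumes a Linux guest where `/proc/meminfo` is available.

```shell
# Hypothetical helpers, not part of llama.cpp.

# Number of CPU cores visible to the guest.
vm_cores() {
  nproc
}

# Total RAM in GiB, rounded down (MemTotal in /proc/meminfo is given in kiB).
vm_ram_gib() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 1024 }' /proc/meminfo
}

echo "cores: $(vm_cores), RAM: $(vm_ram_gib) GiB"
```

The reported RAM is usually a little below the assigned value, because the kernel reserves some memory for itself.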

Check if git is installed by entering “git --version” in the terminal.
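If you prefer to script that check, here is a small sketch. The function name `ensure_tool` is my own invention, and the install hint assumes an apt-based system like the Ubuntu VM above.

```shell
# Hypothetical helper: report whether a command is available on the PATH.
ensure_tool() {
  # $1: name of the command to look for, e.g. git
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1 found"
  else
    echo "$1 missing"
    return 1
  fi
}

# Print an install hint if git is not there yet.
ensure_tool git || echo "install it with: sudo apt install git"
```

The same function can be reused for other tools needed later, for example `ensure_tool make` or `ensure_tool curl`.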

Clone the llama.cpp project and build it by entering the following commands in the terminal (“make” without any options builds for CPU only; you may need to install the gcc compiler first):

git clone https://github.com/ggerganov/llama.cpp

cd llama.cpp

sudo apt install build-essential # gcc compiler

make

Downloading the model can be done with a nice script:

bash <(curl -sSL https://g.bodaay.io/hfd) -m TheBloke/CodeLlama-70B-hf-GGUF --concurrent 8 --storage models/

After downloading the model, we can start llama.cpp.
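Before launching, it can be worth checking that the download really produced a model file and not, say, an error page. GGUF files begin with the four ASCII bytes `GGUF`, so a rough sanity check could look like the sketch below. The function name `is_gguf` is hypothetical, and the check says nothing about whether the file is complete.

```shell
# Hypothetical helper: succeed if the file exists and starts with the GGUF magic bytes.
is_gguf() {
  [ -f "$1" ] && [ "$(head -c 4 "$1")" = "GGUF" ]
}

# Path as used in this post; adjust it if you stored the model elsewhere.
MODEL=./models/TheBloke_CodeLlama-70B-hf-GGUF/codellama-70b-hf.Q5_K_M.gguf

if is_gguf "$MODEL"; then
  echo "model looks like a GGUF file"
else
  echo "model missing or not a GGUF file: $MODEL"
fi
```

If the check passes, the server can be started: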

./server --model ./models/TheBloke_CodeLlama-70B-hf-GGUF/codellama-70b-hf.Q5_K_M.gguf --host 192.168.50.13 --port 8080 -c 2048
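Once the server is up, you can test it from another machine with curl. The sketch below assumes the server's `/completion` endpoint, which takes a JSON body with `prompt` and `n_predict`, and the host and port from the command above. The helper names `completion_payload` and `ask_llama` are made up for this post.

```shell
# Assumed to match the --host and --port values passed to ./server.
LLAMA_URL="http://192.168.50.13:8080"

# Hypothetical helper: build the JSON body for /completion.
# Only backslashes and double quotes are escaped, so keep the prompt on one line.
completion_payload() {
  escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"prompt": "%s", "n_predict": %d}' "$escaped" "${2:-64}"
}

# Hypothetical helper: send a prompt to the server and print the raw JSON response.
# $1: prompt text, $2: maximum number of tokens to generate (default 64)
ask_llama() {
  curl -sS --max-time 600 "$LLAMA_URL/completion" \
    -H "Content-Type: application/json" \
    -d "$(completion_payload "$1" "${2:-64}")"
}
```

A call like `ask_llama "// C function that reverses a string" 128` should return a JSON object whose `content` field holds the generated text. On CPU only, a 70B model will take a while to answer, hence the generous timeout.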

The next step would be to create a plugin, e.g. for VS, that autofills code from a comment block.